This is reposted from a blog post I wrote for Systems Watch.

Today Amazon Web Services experienced multiple service degradations in one availability zone of its Virginia region (us-east-1), which caused Reddit, Netflix, and many other sites to lose service for several hours. Most of the posts, tweets, and articles on the topic bash developers and systems folks for architecting their services in a single zone. However, we have spoken with, and seen first hand, many companies that were affected by this so-called single-zone AWS issue even though they were architected across multiple availability zones.

How is this possible? One of the most common complaints is that the management API stack is the glue that holds Amazon Web Services together. Some people call the programmatic API libraries directly, others use the AWS Management Console, and some use RightScale, but all of these are layers built on the AWS API platform, and when that platform goes down, all bets are off.

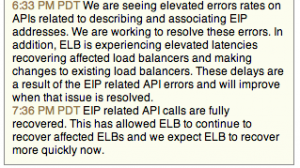

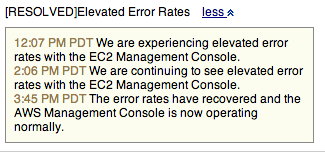

AWS API Errors and AWS Management Console Errors

The problem scenario occurs when you have a system architected across two or more availability zones in Virginia (us-east), one of the biggest and oldest AWS regions, and AWS has an outage in one specific zone in that region.

A waterfall effect then seems to happen: the AWS API stack gets overwhelmed to the point of being useless for any management task in the region. Our guess is that, either because of automated failover and scale-up by AWS and its clients or because of the outage itself, the API stack cannot handle the request load from everyone affected. This leaves AWS users in the region unable to fail over or add resources in an unaffected zone.

Typically your organization cannot afford to keep 100% redundant capacity sitting idle all the time; it becomes a money issue. So if your resources are split 50/50 across two zones and you lose one zone, the remaining 50% of your resources cannot handle 100% of the load, and your organization's services degrade. To remedy this, whether through automation or manual intervention, you spin up the lost 50% of resources in a different, unaffected zone to meet demand.
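The capacity arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming capacity is split evenly across zones and measured in abstract units; the function names are hypothetical and not part of any AWS tool. It shows why a 50/50 split leaves you at half capacity after a zone loss, and why riding out that loss without launching anything new means each of two zones must carry the full load on its own.

```python
def surviving_capacity(zones: int, lost: int) -> float:
    """Fraction of total capacity still running when capacity is split evenly
    across `zones` availability zones and `lost` of them fail."""
    if zones <= 0 or not 0 <= lost <= zones:
        raise ValueError("need zones > 0 and 0 <= lost <= zones")
    return (zones - lost) / zones


def per_zone_capacity_needed(zones: int, peak_load: float) -> float:
    """Capacity each zone must hold so the surviving zones can still carry
    `peak_load` after any single zone fails, without launching anything."""
    if zones < 2:
        raise ValueError("need at least two zones to survive a zone loss")
    return peak_load / (zones - 1)


def replacement_needed(zones: int, lost: int, peak_load: float,
                       per_zone: float) -> float:
    """Capacity that must be launched in healthy zones after `lost` zones fail
    to get back to `peak_load` (0 if the survivors already cover it). This is
    the step that depends on a working management API."""
    remaining = (zones - lost) * per_zone
    return max(0.0, peak_load - remaining)


if __name__ == "__main__":
    # 50/50 split across two zones, 100 units of peak load, one zone lost.
    print(surviving_capacity(2, 1))               # 0.5
    print(replacement_needed(2, 1, 100.0, 50.0))  # 50.0
    print(per_zone_capacity_needed(2, 100.0))     # 100.0
```

For two zones and a peak load of 100 units, `per_zone_capacity_needed(2, 100.0)` is 100.0, i.e. 200 units provisioned in total, which is exactly the idle redundancy most organizations decline to pay for. With three zones the same rule gives 50.0 per zone, or 150 in total.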

This is where the problem occurs: when you can't manage anything in AWS because its APIs are unavailable, you can't spin up the lost 50% in an unaffected zone, and you are in big trouble and helpless. In a perfect world, organizations would make enough money to keep enough resources in either of the two zones to carry the entire load, or better yet to run mirrored capacity in multiple regions, but most of the time this isn't the case. This is often because management, not engineering, is willing to take the risk: organizations reason that degradation is not a full outage and that having 50% of resources available is better than 0%. End users are angry either way.

So as an AWS client, when you lose all control to fail over, scale up, or rebuild in a healthy zone, and whatever redundancy you have is overwhelmed, you have to wait hours for AWS to restore API service before you can regain control and recover your resources.
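To make this failure mode concrete, here is a minimal Python sketch of an automated failover step. It assumes a hypothetical `launch_in_zone` function wrapping whatever launch call you use (the raw API, the console's backend, or a tool like RightScale) and a hypothetical `ControlPlaneUnavailable` exception for API errors; neither is a real AWS or RightScale API, and the zone names in the example are illustrative. When every call fails, as in the outage described above, the function can only report the full shortfall.

```python
import time
from typing import Callable, Sequence


class ControlPlaneUnavailable(Exception):
    """Hypothetical error raised when the management API rejects or times out."""


def restore_capacity(
    needed: int,
    healthy_zones: Sequence[str],
    launch_in_zone: Callable[[str, int], int],
    max_attempts: int = 5,
    base_delay: float = 1.0,
) -> int:
    """Try to launch `needed` instances across `healthy_zones`.

    `launch_in_zone(zone, count)` is a hypothetical wrapper around whatever
    launch call you use; it returns how many instances actually started, or
    raises ControlPlaneUnavailable. Returns the shortfall still missing after
    all attempts (0 means capacity was fully restored).
    """
    remaining = needed
    for attempt in range(max_attempts):
        for zone in healthy_zones:
            if remaining == 0:
                return 0
            try:
                started = launch_in_zone(zone, remaining)
            except ControlPlaneUnavailable:
                continue  # the API refused this request; try the next zone
            remaining -= min(started, remaining)
        if remaining == 0:
            return 0
        if attempt < max_attempts - 1:
            # Back off exponentially so our own retries add less load to an
            # API stack that is already overwhelmed.
            time.sleep(base_delay * 2 ** attempt)
    return remaining


if __name__ == "__main__":
    def api_down(zone: str, count: int) -> int:
        raise ControlPlaneUnavailable(f"cannot launch {count} in {zone}")

    # Every call fails, so the whole shortfall remains: we stay degraded.
    print(restore_capacity(10, ["us-east-1b", "us-east-1c"], api_down,
                           base_delay=0.0))  # 10
```

The backoff only limits how much extra load your own automation adds; as long as the API stack is down, no retry policy can bring the lost capacity back.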

This is our view of how an AWS outage can cause problems even when you are deployed across multiple availability zones.

Also, Systems Watch is a great resource for free, real-time AWS uptime data.